World After DeepSeek. 28 Weeks Later...

Reflections on the long-term impact of DeepSeek on the global AI and tech ecosystem nine months after that pivotal "DeepSeek moment"

This article is the first part of the deep-dive series “Was DeepSeek Such a Big Deal?”, originally published in the AI Supremacy newsletter in collaboration with Michael Spencer.

Check out the full version here.

Also, big thanks to my Runa Capital colleagues Francesco Ricciuti and Maxime Corbani for sharing valuable ideas on this topic!

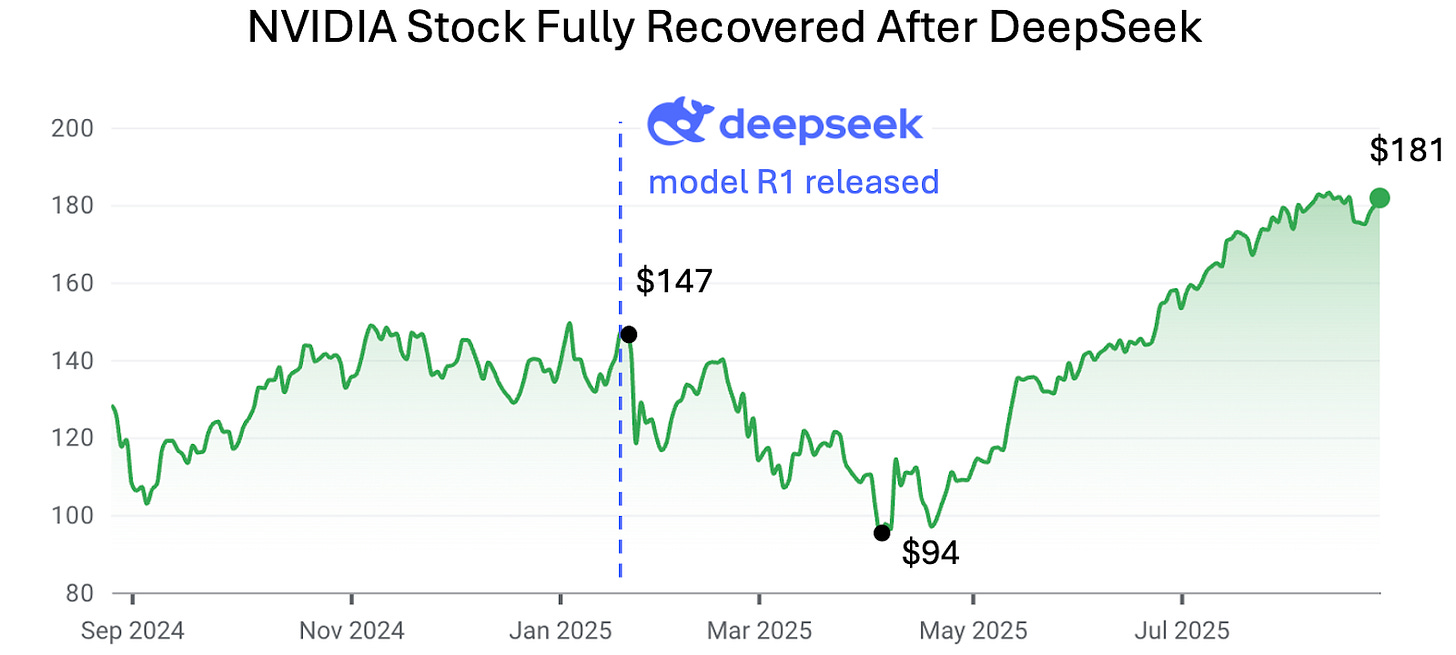

In January 2025, an unknown Chinese startup, DeepSeek, stunned the global tech community with the release of its R1 reasoning model—delivering breakthrough performance at a fraction of the typical training cost. This led to a record NVIDIA stock sell-off. Leading media outlets erupted with apocalyptic headlines predicting the downfall of OpenAI and the collapse of America's tech dominance.

Fast forward to early September 2025:

NVIDIA’s stock is up 22% from its “pre-DeepSeek” levels.

OpenAI not only survived – it raised another $40B in April 2025, marking the largest private tech funding round in history.

Other American AI players like xAI and Anthropic continue to attract billions in fresh capital too.

The launch of DeepSeek’s new R2 model has been repeatedly delayed — originally planned for May, it still has no confirmed release date.

So, was DeepSeek truly as revolutionary as the media portrayed it seven months ago — or was it simply a hype-fueled moment of panic?

In this article, we examine the real long-term consequences of DeepSeek for AI infrastructure and applications globally—separating the media frenzy from DeepSeek’s actual impact.

DeepSeek vs Global AI

When DeepSeek R1 was released, many expected major LLM companies such as OpenAI, xAI, and Anthropic to reconsider their strategies, given that a company like DeepSeek could train models as good as OpenAI’s at a fraction of the cost.

Moreover, DeepSeek’s models are fully open-source, so anyone can now adopt its architectural innovations and training techniques to make their own models as efficient and powerful as DeepSeek’s.

However, spending has not slowed: Anthropic expects to burn about $3B in 2025, xAI about $13B, and OpenAI more than $14B.

It was widely expected that DeepSeek would trigger a price war in the global LLM market—after all, its models launched at 50–100x lower prices than those of OpenAI, xAI, and Anthropic. But are these companies burning billions just because they were forced to slash prices?

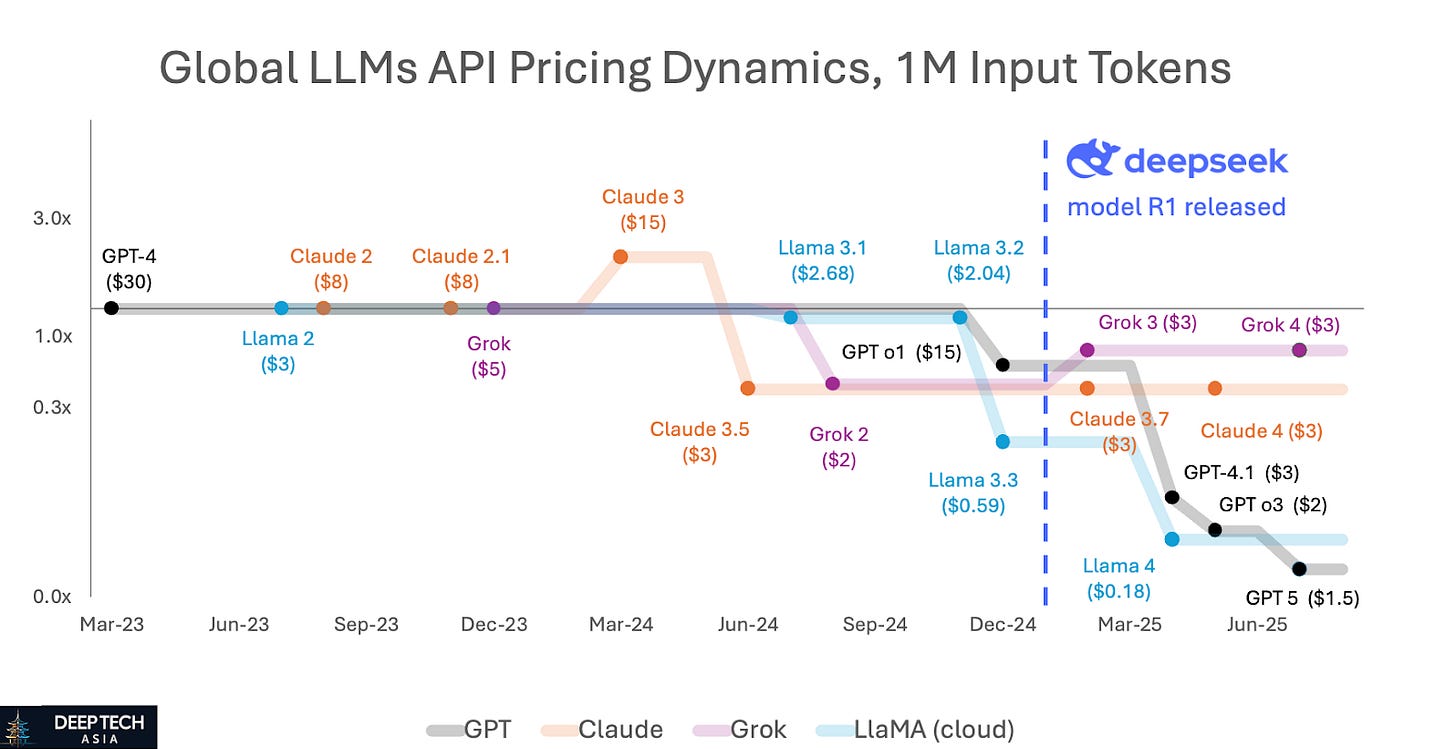

As the chart below shows, LLM prices have indeed declined since January 2025, but this trend began before DeepSeek, at the beginning of 2024. DeepSeek just accelerated it.

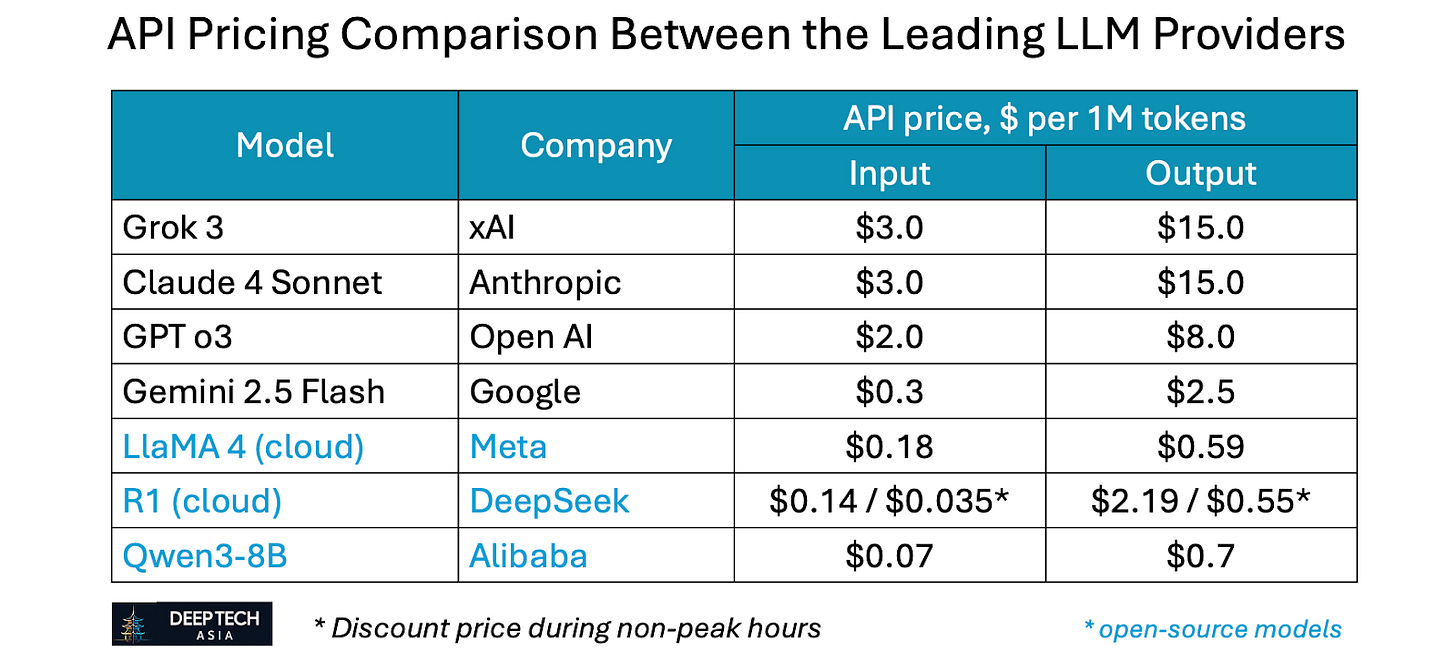

Even after these reductions, the leading global LLM providers still charge 14–15x more than DeepSeek for their APIs (see table below).

Why hasn’t DeepSeek disrupted the business model of global LLM providers?

Product development cost. DeepSeek remains laser-focused on research, raw model performance, and the long-term pursuit of AGI—rather than building tailored products for specific industries or use cases. In contrast, companies like OpenAI and xAI are pursuing a dual strategy: pushing the frontier of model development while also investing heavily in security features, multimodal capabilities, user experience, ecosystem integrations, and industry-specific applications in fields like healthcare, finance, and law.

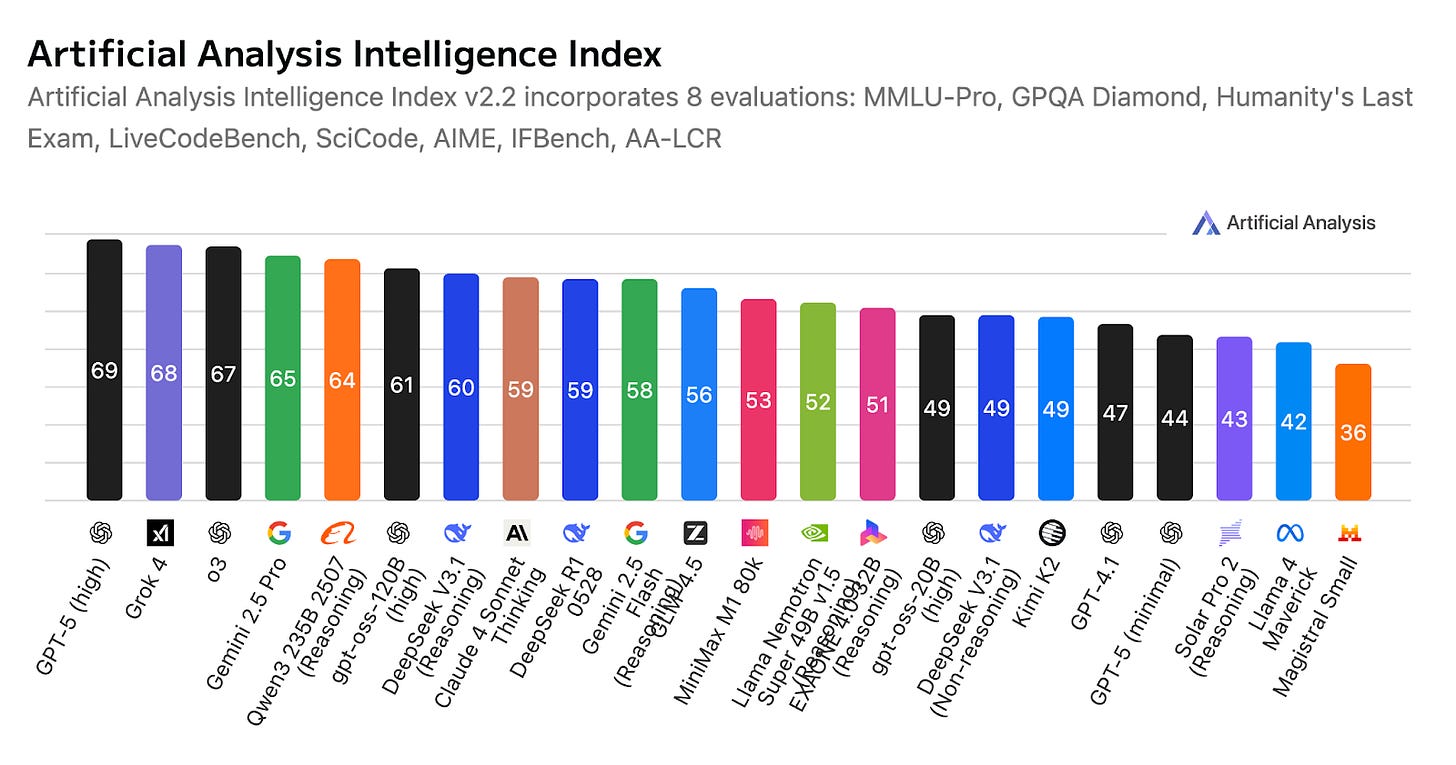

Innovators vs followers. DeepSeek and other Chinese LLMs are still in catch-up mode when it comes to matching OpenAI’s performance. Catching up is far cheaper than leading: reaching comparable results more efficiently, particularly through techniques like model distillation, demands significantly fewer resources than pushing the boundaries of frontier research.

Extra deployment costs. Most global companies are expected to adopt the open-source version of DeepSeek rather than its cloud-hosted offering on Chinese infrastructure, primarily due to concerns around data privacy and regulatory compliance. This raises a key question: is the total cost of deploying and running an open-source model truly lower than that of using closed-source alternatives, especially at scale, with growing inference volumes? Several recent analyses (e.g. here) explore this trade-off in greater detail.

Geopolitics and privacy. DeepSeek’s global market share remains small—currently under 2%. As noted earlier, its cloud-hosted version is generally not considered a viable option for international customers due to privacy and data sovereignty concerns. Even when self-hosting the open-source version is technically and economically feasible, many Western companies remain hesitant, largely due to the perception risks of using a Chinese-developed AI model.

When I surveyed European startups for a comment for Sifted.eu, several asked to remain anonymous. The reason was not technical limitations: they feared potential backlash from customers, even though they run the model entirely on local infrastructure.
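The deployment-cost question raised above (self-hosting an open-source model vs paying for a closed-source API) comes down to a break-even calculation. Here is a minimal sketch of that arithmetic. All figures in it are hypothetical placeholders chosen for round numbers, not real prices from DeepSeek, OpenAI, or any cloud provider, and the function names are made up for this illustration.

```python
from typing import Optional


def monthly_api_cost(tokens_m: float, api_price_per_m: float) -> float:
    """Cost of a hosted API: pay per million tokens, no fixed cost."""
    return tokens_m * api_price_per_m


def monthly_self_hosted_cost(
    tokens_m: float, fixed_monthly: float, variable_per_m: float
) -> float:
    """Cost of self-hosting: fixed infrastructure plus a per-token running cost."""
    return fixed_monthly + tokens_m * variable_per_m


def break_even_tokens_m(
    api_price_per_m: float, fixed_monthly: float, variable_per_m: float
) -> Optional[float]:
    """Monthly volume (in millions of tokens) above which self-hosting is cheaper.

    Returns None if self-hosting never becomes cheaper.
    """
    saving_per_m = api_price_per_m - variable_per_m
    if saving_per_m <= 0:
        return None
    return fixed_monthly / saving_per_m


# Hypothetical inputs (assumptions, not quoted prices):
API_PRICE_PER_M = 10.0    # USD per million tokens via a closed-source API
FIXED_MONTHLY = 20_000.0  # USD per month for GPUs, hosting, and engineering time
VARIABLE_PER_M = 2.0      # USD per million tokens for power and operations

threshold = break_even_tokens_m(API_PRICE_PER_M, FIXED_MONTHLY, VARIABLE_PER_M)
print(f"Break-even volume: {threshold:,.0f}M tokens per month")

for volume in (500.0, 2_500.0, 10_000.0):
    api = monthly_api_cost(volume, API_PRICE_PER_M)
    hosted = monthly_self_hosted_cost(volume, FIXED_MONTHLY, VARIABLE_PER_M)
    print(f"{volume:>8,.0f}M tokens: API ${api:>9,.0f} | self-hosted ${hosted:>9,.0f}")
```

Under these assumed inputs, self-hosting only wins above 2,500M tokens per month. Below that, the fixed infrastructure cost dominates, which is why a low headline price for an open-source model does not automatically translate into a lower total cost.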

Impact on open source

In my view, DeepSeek’s biggest long-term impact will be on the global open‑source LLM ecosystem.

On one hand, its rise has pushed previously closed players toward open source. We’ve already seen this in China, where giants like Alibaba and Baidu opened parts of their models, and globally, with OpenAI releasing gpt-oss, its first open-weight model since GPT-2.

On the other hand, DeepSeek has raised the stakes for existing open-source developers like Meta (LLaMA) and Mistral. Competition is heating up fast. As Kevin Xu suggested in a recent Substack post, Meta’s next AI model may move away from full open-sourcing, and I tend to agree.

Looking purely at performance, pricing, and speed to market over the past year, it’s hard to see why customers would opt for self-hosted versions of LLaMA or Mistral over DeepSeek or Qwen—unless there’s a strong institutional preference to avoid Chinese technology, as is often the case in sectors like government, defense, or finance.

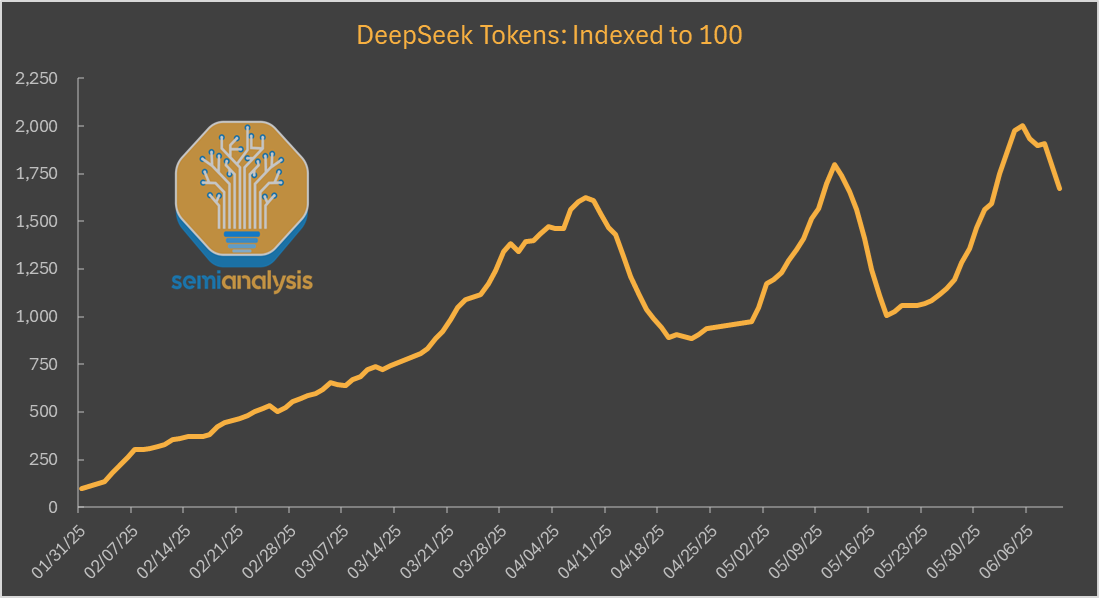

The growing adoption of DeepSeek among B2B users is also reflected in the rising token consumption on third-party hosting platforms.

DeepSeek vs NVIDIA

Finally, about NVIDIA. Was DeepSeek really going to kill it?

Obviously not.

As the chart shows, NVIDIA’s stock was up 22% (as of August 27th, 2025) compared with its level before the DeepSeek-R1 model was released.

In fact, NVIDIA’s revenue grew 69% YoY in Q1 2025 despite the restrictions on sales to China.

This surge highlights that even as AI training and inference become more efficient, overall demand for compute continues to rise. This is a classic case of Jevons Paradox—where increased efficiency leads to greater overall consumption. As more companies adopt AI and train increasingly specialized models, the demand for high-performance chips continues to accelerate.
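The Jevons Paradox argument can be made concrete with a toy model. The sketch below assumes a constant-elasticity demand curve for "AI work" and assumes that the price of a unit of work falls in proportion to the efficiency gain. Both are simplifying assumptions for illustration, and the elasticity values are hypothetical, not estimates of the real AI market.

```python
def compute_demand_multiplier(efficiency_gain: float, elasticity: float) -> float:
    """Relative change in total compute demand after an efficiency gain.

    efficiency_gain: factor by which compute per unit of AI work falls (4.0 = 4x cheaper).
    elasticity: price elasticity of demand for AI work (assumed constant).
    """
    # Cheaper work means more work demanded: quantity scales with price^(-elasticity).
    work_multiplier = efficiency_gain ** elasticity
    # Each unit of work now needs less compute.
    compute_per_unit = 1.0 / efficiency_gain
    return work_multiplier * compute_per_unit


# Hypothetical scenarios for a 4x efficiency gain:
for elasticity in (0.5, 1.0, 1.5):
    multiplier = compute_demand_multiplier(4.0, elasticity)
    print(f"elasticity {elasticity:.1f}: total compute demand x{multiplier:.2f}")
```

In this toy model, total compute demand rises only when elasticity is above 1, meaning that usage grows faster than unit costs fall. The Jevons Paradox reading of NVIDIA’s results is that AI demand is currently in that regime.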

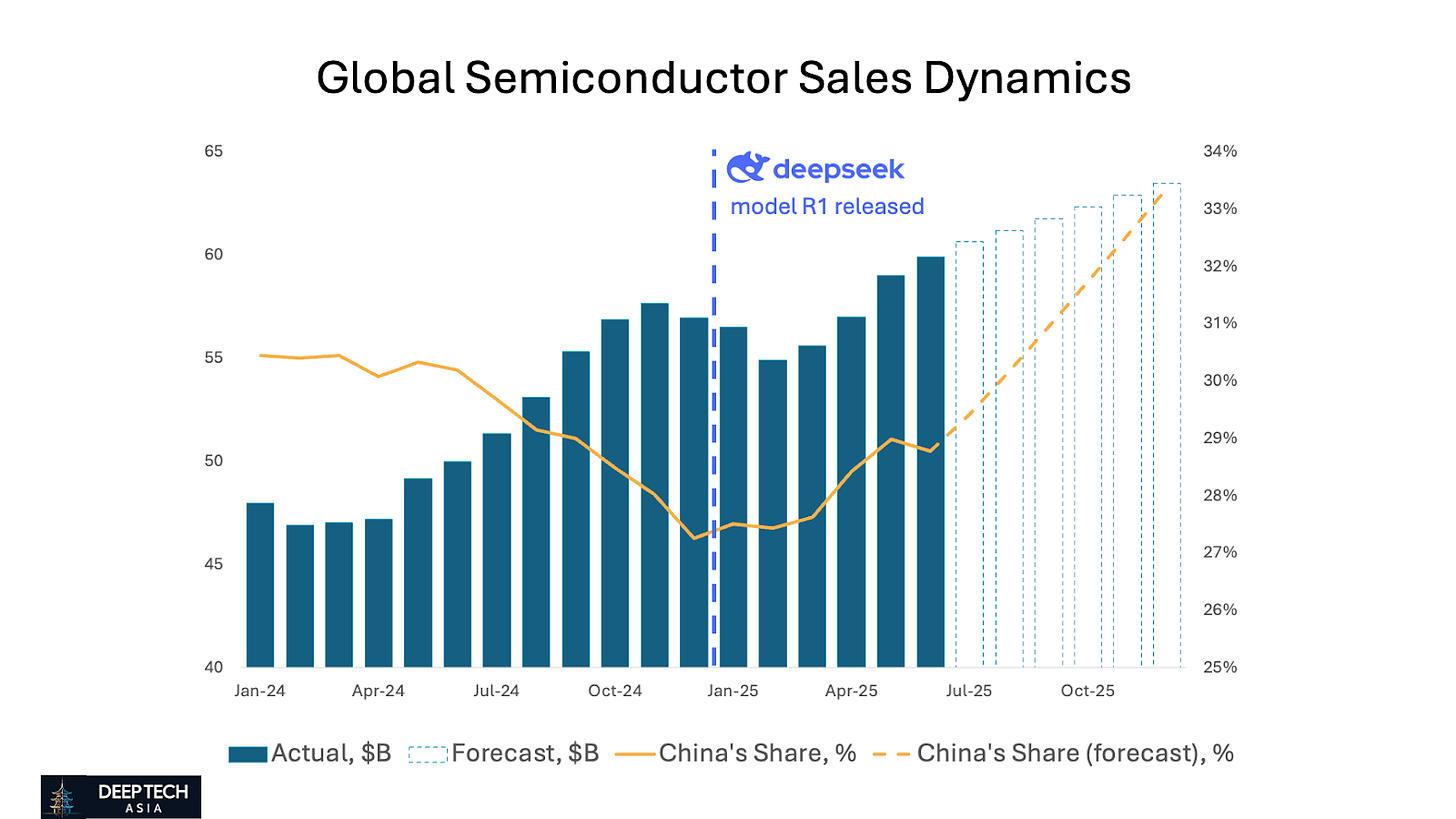

As the graph above shows, global demand for semiconductors continues to grow at a steady 15–20% year-over-year—despite the emergence of more efficient models like DeepSeek.

Interestingly, while China’s share of global semiconductor sales had been on a downward trend, this reversed after the release of DeepSeek-R1 in January 2025. Since then, China’s semiconductor demand has begun climbing again, likely driven by increased local training and deployment of AI models.

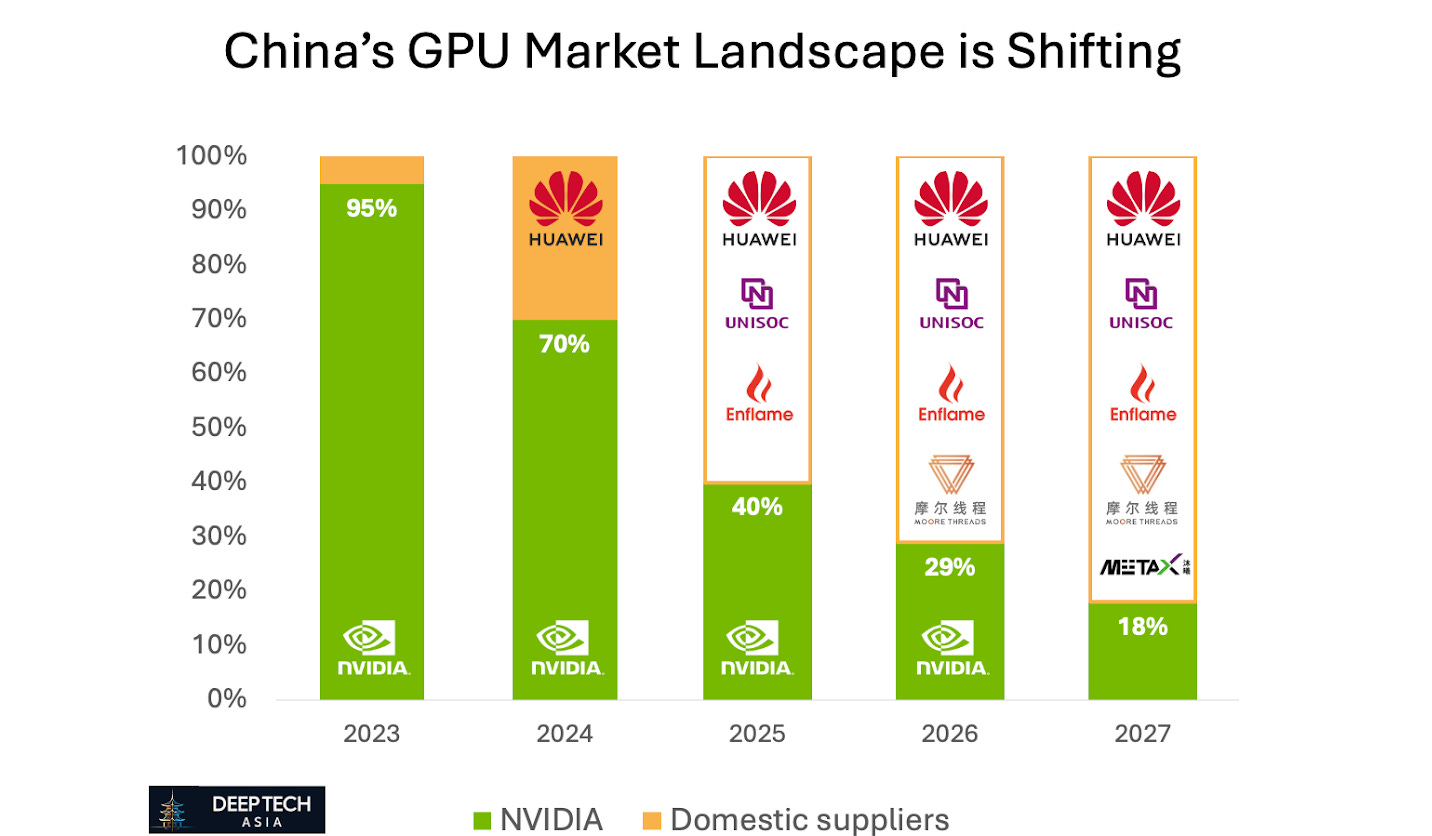

At the same time, the US government imposed restrictions on NVIDIA’s chip sales to China, while Chinese authorities discouraged the use of its H20 processors, going as far as barring major firms such as Tencent, Alibaba, and ByteDance from purchasing them over data security concerns. As a result, Chinese companies are increasingly turning to domestic suppliers like Huawei, UNISOC, and Enflame, a shift that is likely to further erode NVIDIA’s market share in China over the long term.

Of course, China’s transition to domestic chips has caused delays in AI development. Some experts even link the postponed release of DeepSeek’s new R2 model to the ongoing shortage of high-end processors in the country.

Nevertheless, DeepSeek’s upgraded V3.1 model, released in August 2025, is already compatible with existing Chinese chips, and some Chinese automakers have managed to source 100% of their chips from domestic suppliers. These are promising signs that China’s AI industry could become self-reliant in the near future.

Final Thoughts

Compared with the overblown media narratives and the public-market panic of January 2025, the actual impact of DeepSeek on the global tech ecosystem has been more modest:

OpenAI, Anthropic, and others still raise billions and burn through them in a few months

LLM API prices are decreasing, but they were already decreasing before DeepSeek

NVIDIA’s business is flourishing more than ever, with revenue growing nearly 70% YoY

As with most hype cycles, the initial expectations around DeepSeek proved difficult to satisfy, but its longer-term effects are steadily unfolding. DeepSeek has already reshaped the open-source LLM landscape by increasing pressure on incumbents such as Meta and Mistral, while nudging closed-source players like OpenAI and Alibaba toward greater openness.

While its direct impact is not always immediately visible, the influence of the “DeepSeek phenomenon” continues to accelerate global AI adoption, pushing LLMs toward becoming a true commodity—much like electricity or internet connectivity in earlier eras.

Even more important is DeepSeek’s profound impact on China’s AI and broader tech ecosystem, and the implications this holds for its position on the global stage. The next chapter of this series will explore that aspect in more detail.

If you have any proposals, ideas, or feedback, we’d love to hear from you! Feel free to reach out at denis@deeptech.asia or on LinkedIn. Let’s connect and explore how to improve together.